Gesture Recognition and Landmark Detection (Gesture and Gesture Landmark Detection)

Python Basics

Recognition Basics

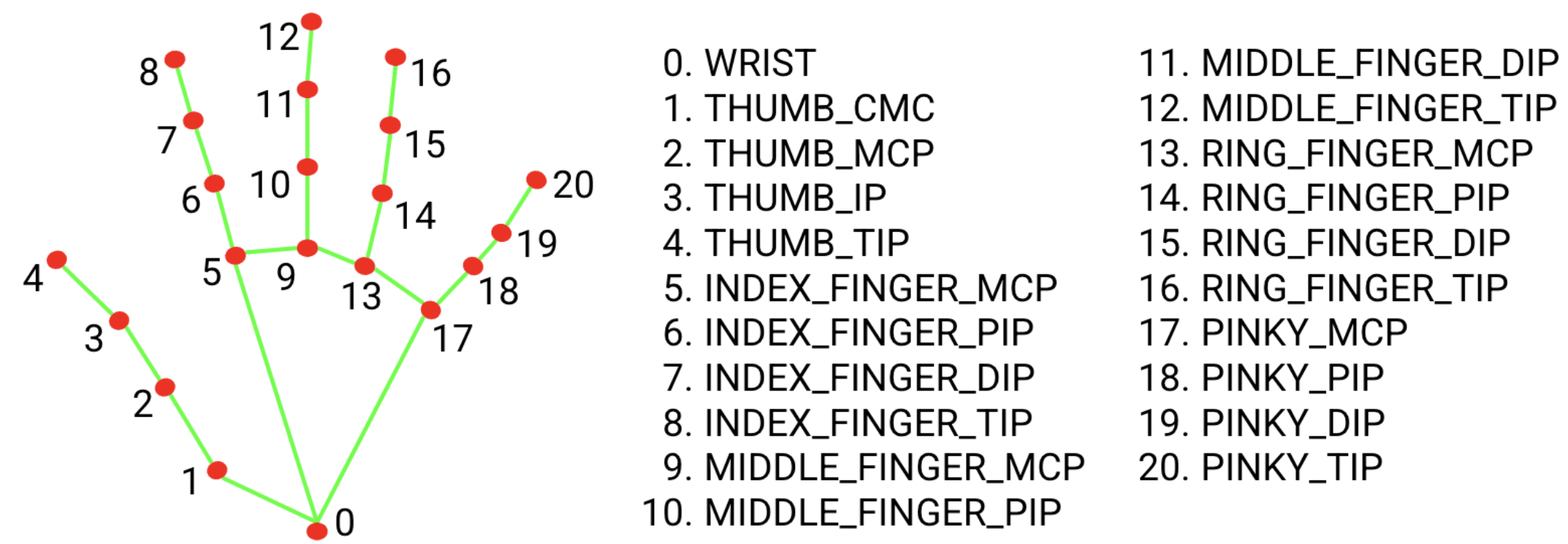

Hand Landmarks

The joints of the hand are broken down into 21 key landmarks.

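In code, the 21 landmarks are addressed by a 0-based index; the sample result further down numbers them #1 to #21, so #1 is index 0. The names below follow MediaPipe's `HandLandmark` enum (available as `mp.solutions.hands.HandLandmark` when MediaPipe is installed); the list is written out by hand here so the sketch runs without MediaPipe, and `landmark_index` is a hypothetical helper.

```python
# 0-based landmark indices, in the order MediaPipe returns them
# (names follow mediapipe.solutions.hands.HandLandmark).
HAND_LANDMARK_NAMES = [
    "WRIST",
    "THUMB_CMC", "THUMB_MCP", "THUMB_IP", "THUMB_TIP",
    "INDEX_FINGER_MCP", "INDEX_FINGER_PIP", "INDEX_FINGER_DIP", "INDEX_FINGER_TIP",
    "MIDDLE_FINGER_MCP", "MIDDLE_FINGER_PIP", "MIDDLE_FINGER_DIP", "MIDDLE_FINGER_TIP",
    "RING_FINGER_MCP", "RING_FINGER_PIP", "RING_FINGER_DIP", "RING_FINGER_TIP",
    "PINKY_MCP", "PINKY_PIP", "PINKY_DIP", "PINKY_TIP",
]

# Each finger has 4 landmarks after the wrist, so the tips sit at multiples of 4.
FINGERTIP_INDICES = [4, 8, 12, 16, 20]


def landmark_index(name):
    """Return the 0-based index of a landmark name, e.g. 'THUMB_TIP' -> 4."""
    return HAND_LANDMARK_NAMES.index(name)


print(len(HAND_LANDMARK_NAMES))  # 21
print([HAND_LANDMARK_NAMES[i] for i in FINGERTIP_INDICES])
# ['THUMB_TIP', 'INDEX_FINGER_TIP', 'MIDDLE_FINGER_TIP', 'RING_FINGER_TIP', 'PINKY_TIP']
```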

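Gesture Detection

Coordinates

The recognition result gives the 3D coordinates of all 21 landmarks in two forms:

- Normalized to the image (Normalized Image), returned as `hand_landmarks`: `x` and `y` are scaled to [0.0, 1.0] by the image width and height; `z` is the depth relative to the wrist, and smaller values are closer to the camera.

- Not normalized, returned as `hand_world_landmarks`: real-world 3D coordinates in meters, with the origin at the hand's approximate geometric center.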
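Because normalized `x` and `y` are fractions of the image width and height, mapping a landmark onto the image is a multiply, floor and clamp. A minimal sketch; `to_pixel` is a hypothetical helper, and the 640x480 image size is an assumption chosen for illustration. The input is landmark #1 (the wrist) from the sample result.

```python
import math


def to_pixel(x, y, width, height):
    """Map a normalized (x, y) landmark to integer pixel coordinates.

    Returns None when the point lies outside [0, 1], i.e. off the image.
    """
    if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
        return None
    # Clamp so that x == 1.0 maps to the last column, not one past it.
    px = min(math.floor(x * width), width - 1)
    py = min(math.floor(y * height), height - 1)
    return px, py


# Landmark #1 from the sample result, on an assumed 640x480 image.
print(to_pixel(0.37759217619895935, 0.4484555721282959, 640, 480))  # (241, 215)
```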

Gesture Categories (Category)

0 - Unrecognized gesture, label: Unknown

1 - Closed fist, label: Closed_Fist

2 - Open palm, label: Open_Palm

3 - Pointing up, label: Pointing_Up

4 - Thumbs down, label: Thumb_Down

5 - Thumbs up, label: Thumb_Up

6 - Victory, label: Victory

7 - Love, label: ILoveYou
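When acting on a result, it is safer to key off `category_name` than `index`: in the sample result the recognized gesture carries `index = -1`. A minimal sketch mapping the labels listed above to descriptions; `GESTURE_LABELS`, `describe_gesture` and the 0.5 score threshold are hypothetical choices, not part of MediaPipe.

```python
# Label -> description, taken from the category list above.
GESTURE_LABELS = {
    "Unknown": "Unrecognized gesture",
    "Closed_Fist": "Closed fist",
    "Open_Palm": "Open palm",
    "Pointing_Up": "Pointing up",
    "Thumb_Down": "Thumbs down",
    "Thumb_Up": "Thumbs up",
    "Victory": "Victory",
    "ILoveYou": "Love",
}


def describe_gesture(category_name, score, min_score=0.5):
    """Return a readable description of a gesture.

    Falls back to 'Unrecognized gesture' when the label is not in the table
    or the score is below min_score (an assumed threshold).
    """
    if score < min_score or category_name not in GESTURE_LABELS:
        return GESTURE_LABELS["Unknown"]
    return GESTURE_LABELS[category_name]


print(describe_gesture("Thumb_Down", 0.7730692625045776))  # Thumbs down
```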

Recognition Workflow

- Load the recognition model

- Load the target file to recognize (an image)

- Run recognition

- Process the recognition result
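The four steps above can be sketched as two small functions. `recognize_file` and `summarize` are hypothetical names; the MediaPipe calls are the same ones used in the example code (`BaseOptions`, `GestureRecognizerOptions`, `GestureRecognizer.create_from_options`, `mp.Image.create_from_file`, `recognize`). MediaPipe is imported inside `recognize_file` so that `summarize` can be tried without the library or a model file.

```python
from types import SimpleNamespace


def recognize_file(model_path, image_path):
    """Steps 1-3: load the model, load the image, run recognition."""
    # Imported here so summarize() can be used without MediaPipe installed.
    import mediapipe as mp
    from mediapipe.tasks import python
    from mediapipe.tasks.python import vision

    options = vision.GestureRecognizerOptions(
        base_options=python.BaseOptions(model_asset_path=model_path))
    # The context manager closes the recognizer when done.
    with vision.GestureRecognizer.create_from_options(options) as recognizer:
        image = mp.Image.create_from_file(image_path)
        return recognizer.recognize(image)


def summarize(result):
    """Step 4: turn a result into (handedness, gesture, score) tuples.

    Returns an empty list when no hand was detected, instead of raising
    IndexError the way result.gestures[0][0] would.
    """
    summary = []
    for hand, gestures in zip(result.handedness, result.gestures):
        top = gestures[0]  # first category = top gesture, as the example code uses
        summary.append((hand[0].category_name, top.category_name, top.score))
    return summary


# A stand-in object shaped like the sample result (no model needed):
fake = SimpleNamespace(
    gestures=[[SimpleNamespace(category_name="Thumb_Down", score=0.7730692625045776)]],
    handedness=[[SimpleNamespace(category_name="Right")]],
)
print(summarize(fake))  # [('Right', 'Thumb_Down', 0.7730692625045776)]
print(summarize(SimpleNamespace(gestures=[], handedness=[])))  # []
```

With a model downloaded, the real call would be `summarize(recognize_file("gesture_recognizer.task", "thumbs_down.jpg"))`, where both paths are placeholders.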

Sample recognition result

```
GestureRecognizerResult(
    gestures=[[
        Category(index=-1, score=0.7730692625045776, display_name='', category_name='Thumb_Down'),
    ]],
    handedness=[[
        Category(index=0, score=0.9903181195259094, display_name='Right', category_name='Right'),
    ]],
    hand_landmarks=[[
        NormalizedLandmark(x=0.37759217619895935, y=0.4484555721282959, z=-7.660036089873756e-7, visibility=0.0, presence=0.0),  # 1
        NormalizedLandmark(x=0.456604540348053, y=0.5906896591186523, z=-0.1227630078792572, visibility=0.0, presence=0.0),  # 2
        NormalizedLandmark(x=0.5610467195510864, y=0.709786593914032, z=-0.2168498933315277, visibility=0.0, presence=0.0),  # 3
        NormalizedLandmark(x=0.6086992621421814, y=0.8151221871376038, z=-0.2963743209838867, visibility=0.0, presence=0.0),  # 4
        NormalizedLandmark(x=0.637844443321228, y=0.908831775188446, z=-0.357928603887558, visibility=0.0, presence=0.0),  # 5
        NormalizedLandmark(x=0.890494167804718, y=0.6001472473144531, z=-0.20001022517681122, visibility=0.0, presence=0.0),  # 6
        NormalizedLandmark(x=0.7954045534133911, y=0.6598713397979736, z=-0.3040156066417694, visibility=0.0, presence=0.0),  # 7
        NormalizedLandmark(x=0.6656860709190369, y=0.6365773677825928, z=-0.3355799913406372, visibility=0.0, presence=0.0),  # 8
        NormalizedLandmark(x=0.6671782732009888, y=0.6085968017578125, z=-0.35448724031448364, visibility=0.0, presence=0.0),  # 9
        NormalizedLandmark(x=0.8986698389053345, y=0.5096369385719299, z=-0.1952798068523407, visibility=0.0, presence=0.0),  # 10
        NormalizedLandmark(x=0.7527908682823181, y=0.5814691781997681, z=-0.29371270537376404, visibility=0.0, presence=0.0),  # 11
        NormalizedLandmark(x=0.6129770278930664, y=0.5601966381072998, z=-0.30048179626464844, visibility=0.0, presence=0.0),  # 12
        NormalizedLandmark(x=0.6546463966369629, y=0.541213870048523, z=-0.30734455585479736, visibility=0.0, presence=0.0),  # 13
        NormalizedLandmark(x=0.8554786443710327, y=0.42796140909194946, z=-0.20077751576900482, visibility=0.0, presence=0.0),  # 14
        NormalizedLandmark(x=0.7269747853279114, y=0.4955262541770935, z=-0.2942975163459778, visibility=0.0, presence=0.0),  # 15
        NormalizedLandmark(x=0.609464704990387, y=0.4916495382785797, z=-0.26330289244651794, visibility=0.0, presence=0.0),  # 16
        NormalizedLandmark(x=0.6465416550636292, y=0.47868776321411133, z=-0.24250465631484985, visibility=0.0, presence=0.0),  # 17
        NormalizedLandmark(x=0.7972955703735352, y=0.355774462223053, z=-0.21797706186771393, visibility=0.0, presence=0.0),  # 18
        NormalizedLandmark(x=0.6930341720581055, y=0.400363564491272, z=-0.2792198359966278, visibility=0.0, presence=0.0),  # 19
        NormalizedLandmark(x=0.6115851402282715, y=0.41218101978302, z=-0.2648606598377228, visibility=0.0, presence=0.0),  # 20
        NormalizedLandmark(x=0.6312040090560913, y=0.400348961353302, z=-0.2525957226753235, visibility=0.0, presence=0.0),  # 21
    ]],
    hand_world_landmarks=[[
        Landmark(x=-0.0647607073187828, y=-0.017019513994455338, z=0.06780295073986053, visibility=0.0, presence=0.0),  # 1
        Landmark(x=-0.05396917834877968, y=0.01182178407907486, z=0.044786952435970306, visibility=0.0, presence=0.0),  # 2
        Landmark(x=-0.04683190956711769, y=0.04057489335536957, z=0.029532015323638916, visibility=0.0, presence=0.0),  # 3
        Landmark(x=-0.03718949109315872, y=0.06816671043634415, z=0.010851293802261353, visibility=0.0, presence=0.0),  # 4
        Landmark(x=-0.028814276680350304, y=0.08923465758562088, z=-0.004659995436668396, visibility=0.0, presence=0.0),  # 5
        Landmark(x=0.00351596437394619, y=0.021648747846484184, z=0.00543522834777832, visibility=0.0, presence=0.0),  # 6
        Landmark(x=-0.009742360562086105, y=0.03096802346408367, z=-0.016697056591510773, visibility=0.0, presence=0.0),  # 7
        Landmark(x=-0.02304564043879509, y=0.030055532231926918, z=-0.003597669303417206, visibility=0.0, presence=0.0),  # 8
        Landmark(x=-0.025732334703207016, y=0.021427519619464874, z=0.01740020513534546, visibility=0.0, presence=0.0),  # 9
        Landmark(x=0.005610080435872078, y=0.0008263451745733619, z=0.0033886171877384186, visibility=0.0, presence=0.0),  # 10
        Landmark(x=-0.009612726978957653, y=0.014454616233706474, z=-0.02695823833346367, visibility=0.0, presence=0.0),  # 11
        Landmark(x=-0.03230900317430496, y=0.01393263228237629, z=-0.014949709177017212, visibility=0.0, presence=0.0),  # 12
        Landmark(x=-0.025038108229637146, y=0.0064987121149897575, z=0.017692938446998596, visibility=0.0, presence=0.0),  # 13
        Landmark(x=0.0009549870155751705, y=-0.015959488227963448, z=-0.00305059552192688, visibility=0.0, presence=0.0),  # 14
        Landmark(x=-0.018123731017112732, y=-0.005495254881680012, z=-0.024450279772281647, visibility=0.0, presence=0.0),  # 15
        Landmark(x=-0.03601652383804321, y=-0.0031670834869146347, z=-0.00937420129776001, visibility=0.0, presence=0.0),  # 16
        Landmark(x=-0.03027101419866085, y=-0.005926121026277542, z=0.017353922128677368, visibility=0.0, presence=0.0),  # 17
        Landmark(x=-0.013136080466210842, y=-0.0354243665933609, z=-0.003907293081283569, visibility=0.0, presence=0.0),  # 18
        Landmark(x=-0.020968398079276085, y=-0.024764444679021835, z=-0.018906205892562866, visibility=0.0, presence=0.0),  # 19
        Landmark(x=-0.034380149096250534, y=-0.020498761907219887, z=-0.012047678232192993, visibility=0.0, presence=0.0),  # 20
        Landmark(x=-0.0340217649936676, y=-0.025126785039901733, z=0.003296598792076111, visibility=0.0, presence=0.0),  # 21
    ]],
)
```
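Since `hand_world_landmarks` are in meters, distances between them are physical lengths rather than fractions of the image. A minimal sketch computing the thumb-tip to index-tip distance from the sample above (#5 is index 4, `THUMB_TIP`; #9 is index 8, `INDEX_FINGER_TIP`); `pinch_distance` is a hypothetical helper built on the standard-library `math.dist`.

```python
import math

# World landmarks #5 (THUMB_TIP, index 4) and #9 (INDEX_FINGER_TIP, index 8)
# copied from the sample result; units are meters.
THUMB_TIP = (-0.028814276680350304, 0.08923465758562088, -0.004659995436668396)
INDEX_TIP = (-0.025732334703207016, 0.021427519619464874, 0.01740020513534546)


def pinch_distance(thumb_tip, index_tip):
    """Euclidean distance between two 3D points (meters in, meters out)."""
    return math.dist(thumb_tip, index_tip)


d = pinch_distance(THUMB_TIP, INDEX_TIP)
print(f"{d * 100:.1f} cm")  # 7.1 cm
```

The same calculation on the normalized `hand_landmarks` would give a value that shrinks as the hand moves away from the camera, which is why the world coordinates suit measurements like this.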

Example code (gesture_image.py)

(Official example code, with some modifications)

```python
import math

import cv2
import matplotlib.pyplot as plt
import mediapipe as mp
from mediapipe.framework.formats import landmark_pb2
from mediapipe.tasks import python
from mediapipe.tasks.python import vision

# Hide spines, ticks and tick labels on every subplot.
plt.rcParams.update({
    'axes.spines.top': False,
    'axes.spines.right': False,
    'axes.spines.left': False,
    'axes.spines.bottom': False,
    'xtick.labelbottom': False,
    'xtick.bottom': False,
    'ytick.labelleft': False,
    'ytick.left': False,
    'xtick.labeltop': False,
    'xtick.top': False,
    'ytick.labelright': False,
    'ytick.right': False
})

mp_hands = mp.solutions.hands
mp_drawing = mp.solutions.drawing_utils
mp_drawing_styles = mp.solutions.drawing_styles


def display_one_image(image, title, subplot, titlesize=16):
    """Displays one image along with the predicted category name and score."""
    plt.subplot(*subplot)
    plt.imshow(image)
    if len(title) > 0:
        plt.title(title, fontsize=int(titlesize), color='black',
                  fontdict={'verticalalignment': 'center'}, pad=int(titlesize / 1.5))
    return (subplot[0], subplot[1], subplot[2] + 1)


def display_batch_of_images_with_gestures_and_hand_landmarks(images, results):
    """Displays a batch of images with the gesture category and its score along with the hand landmarks."""
    # Images and labels.
    images = [image.numpy_view() for image in images]
    gestures = [top_gesture for (top_gesture, _) in results]
    multi_hand_landmarks_list = [multi_hand_landmarks for (_, multi_hand_landmarks) in results]

    # Auto-squaring: this will drop data that does not fit into square or square-ish rectangle.
    rows = int(math.sqrt(len(images)))
    cols = len(images) // rows

    # Size and spacing.
    FIGSIZE = 13.0
    SPACING = 0.1
    subplot = (rows, cols, 1)
    if rows < cols:
        plt.figure(figsize=(FIGSIZE, FIGSIZE / cols * rows))
    else:
        plt.figure(figsize=(FIGSIZE / rows * cols, FIGSIZE))

    # Display gestures and hand landmarks.
    for i, (image, gesture) in enumerate(zip(images[:rows * cols], gestures[:rows * cols])):
        title = f"{gesture.category_name} ({gesture.score:.2f})"
        dynamic_titlesize = FIGSIZE * SPACING / max(rows, cols) * 40 + 3
        # numpy_view() is read-only, so draw on a copy.
        annotated_image = image.copy()

        for hand_landmarks in multi_hand_landmarks_list[i]:
            # draw_landmarks expects the protobuf NormalizedLandmarkList type.
            hand_landmarks_proto = landmark_pb2.NormalizedLandmarkList()
            hand_landmarks_proto.landmark.extend([
                landmark_pb2.NormalizedLandmark(x=landmark.x, y=landmark.y, z=landmark.z)
                for landmark in hand_landmarks
            ])
            mp_drawing.draw_landmarks(
                annotated_image,
                hand_landmarks_proto,
                mp_hands.HAND_CONNECTIONS,
                mp_drawing_styles.get_default_hand_landmarks_style(),
                mp_drawing_styles.get_default_hand_connections_style())

        subplot = display_one_image(annotated_image, title, subplot, titlesize=dynamic_titlesize)

    # Layout.
    plt.tight_layout()
    plt.subplots_adjust(wspace=SPACING, hspace=SPACING)
    plt.show()


DESIRED_HEIGHT = 480
DESIRED_WIDTH = 480


def resize_and_show(image):
    """Resize so the longer side is 480 px, then show it in an OpenCV window."""
    h, w = image.shape[:2]
    if h < w:
        img = cv2.resize(image, (DESIRED_WIDTH, math.floor(h / (w / DESIRED_WIDTH))))
    else:
        img = cv2.resize(image, (math.floor(w / (h / DESIRED_HEIGHT)), DESIRED_HEIGHT))
    cv2.imshow('Image Source', img)
    cv2.waitKey(0)  # press any key to go to the next image


img_list = ['./thumbs_up.jpg', './thumbs_down.jpg', './victory.jpg', './pointing_up.jpg']

# Preview the images.
preview_images = {name: cv2.imread(name) for name in img_list}
for name, image in preview_images.items():
    print(name)
    resize_and_show(image)
cv2.destroyAllWindows()

# Path to the downloaded gesture_recognizer.task model; adjust for your machine.
model_path = '/Users/binchen/project/mediap/gesture_recognition/gesture_recognizer.task'

# STEP 2: Create a GestureRecognizer object.
base_options = python.BaseOptions(model_asset_path=model_path)
options = vision.GestureRecognizerOptions(base_options=base_options)
recognizer = vision.GestureRecognizer.create_from_options(options)

images = []
results = []
for image_file_name in img_list:
    # STEP 3: Load the input image.
    image_rec = mp.Image.create_from_file(image_file_name)

    # STEP 4: Recognize gestures in the input image.
    recognition_result = recognizer.recognize(image_rec)
    print(recognition_result)

    # STEP 5: Process the result. In this case, visualize it.
    # The original step 5 appended the image directly:
    #     images.append(image_rec)
    # which fails in draw_landmarks with:
    #     ValueError: Input image must contain three channel bgr data.
    # So the file is re-read with OpenCV as 3-channel BGR data, then converted
    # to RGB because matplotlib displays arrays as RGB.
    image_show = cv2.cvtColor(cv2.imread(image_file_name), cv2.COLOR_BGR2RGB)
    image_base = mp.Image(image_format=mp.ImageFormat.SRGB, data=image_show)

    # Skip images with no detected hand; gestures[0][0] would raise IndexError.
    if not recognition_result.gestures:
        continue
    images.append(image_base)
    top_gesture = recognition_result.gestures[0][0]
    hand_landmarks = recognition_result.hand_landmarks
    results.append((top_gesture, hand_landmarks))

if results:
    display_batch_of_images_with_gestures_and_hand_landmarks(images, results)
```
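The auto-squaring in `display_batch_of_images_with_gestures_and_hand_landmarks` can silently drop images: with 5 images, `rows = int(math.sqrt(5)) = 2` and `cols = 5 // 2 = 2`, so only 4 are drawn. A minimal sketch of a hypothetical alternative, `grid_shape`, that keeps the same row count but rounds the column count up so every image gets a cell; `squared_grid` reproduces the original calculation for comparison.

```python
import math


def squared_grid(n):
    """Grid used by the example code: may drop images that do not fit."""
    rows = int(math.sqrt(n))
    return rows, n // rows


def grid_shape(n):
    """Alternative: same row count, but enough columns for all n images."""
    rows = max(1, int(math.sqrt(n)))
    return rows, math.ceil(n / rows)


for n in (4, 5, 7):
    print(n, squared_grid(n), grid_shape(n))
# 4 (2, 2) (2, 2)
# 5 (2, 2) (2, 3)
# 7 (2, 3) (2, 4)
```

With `plt.subplot`, any trailing cells that receive no image simply stay blank, so the plotting loop would iterate over all images instead of `images[:rows * cols]`.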

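Pose Recognition in Video (pose_video.py)

(Official example code, with some modifications)

Pose Recognition in a Live Stream (pose_livestream.py)

(Official example code, with some modifications)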